9.6 Two-way repeated measures ANOVA

9.6.1 Introduction

A two-way repeated measures ANOVA extends its one-way counterpart: instead of one within-subjects variable, we assess the effects of two within-subjects variables, and their interaction, on a dependent variable.

The following example is completely fictional, and has no real grounding in theory (as far as I’m aware). It’s intentionally facetious, but hopefully demonstrates the process of doing a two-way repeated measures ANOVA.

9.6.2 Example

If you did this subject in 2023, you would have met Victor and Gloria in the second statistics assignment, where they were working with data on what predicts high school GPA. They've now moved on to a new project about whether listening to happy or sad music may affect exam performance. To do this, they design a nifty little study. They recruit 20 participants and bring them into the lab. This is their procedure:

- Participants come into the lab and do a series of standard maths exams. Each exam is either easy, medium or hard, and participants are randomly assigned which difficulty they do first. They are also randomly assigned either happy or sad background music for that exam.

- Once they have completed the first exam, they take a 10-minute break and then do another exam that is easy, medium or hard, with either happy or sad music in the background.

- This process repeats until they have done all combinations of difficulty and background music.

- Each exam is scored out of 100.

We have two within-subjects independent variables here:

- Difficulty of the exam (3 levels: easy, medium, hard)

- Background music (2 levels: happy or sad)

Every participant therefore does 6 maths exams: easy-happy, easy-sad, medium-happy, medium-sad, hard-happy and hard-sad. The dependent variable of interest is their exam score. We're interested in whether the difficulty and the music type have an effect on exam performance. Because both of our IVs are within-subjects variables, we will want to use a two-way repeated measures ANOVA.
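Because the design is fully crossed, it can help to see the six conditions laid out explicitly. The sketch below builds the 3 × 2 grid of conditions with expand.grid() and then gives each of 20 participants an independently shuffled order of all six with sample(). Fully random ordering is an assumption for illustration; the procedure above doesn't spell out exactly how the order was randomised.

```r
# The 3 x 2 within-subjects design: every participant sees all six cells
conditions <- expand.grid(
  difficulty = c("easy", "medium", "hard"),
  music = c("happy", "sad"),
  stringsAsFactors = FALSE
)
conditions$label <- paste(conditions$difficulty, conditions$music, sep = "-")
print(conditions$label)

# Hypothetical randomisation: each participant gets the six conditions
# in an independently shuffled order (one row per participant per exam)
set.seed(2023)
n_ptcpt <- 20
schedule <- do.call(rbind, lapply(seq_len(n_ptcpt), function(p) {
  data.frame(
    ptcpt = p,
    session = 1:6,
    condition = sample(conditions$label)
  )
}))

head(schedule)
nrow(schedule)  # 20 participants x 6 exams = 120 rows
```

The 120 rows here line up with the 120 rows in the real data set: one row per participant per exam, which is the long format that repeated measures functions in R expect.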

Reading in the data gives us 120 rows (one per participant per exam, 20 × 6) and four columns:

```
## Rows: 120 Columns: 4
## ── Column specification ──────────────────────────────────
## Delimiter: ","
## chr (2): difficulty, music
## dbl (2): ptcpt, grade
##
## ℹ Use `spec()` to retrieve the full column specification for this data.
## ℹ Specify the column types or set `show_col_types = FALSE` to quiet this message.
```

Here difficulty and music have been read in as character variables. When R turns a character variable into a factor (for example, when plotting or modelling), it orders the levels alphabetically, which would give us easy, hard, medium. Because our categories have a meaningful order that isn't alphabetical, and we want our graph to reflect it, we need to tell R what order the levels should be in. This is simple to do with the factor() function: its first argument is the column you want to convert, and the levels argument sets the order of the levels.

We will recode both the difficulty and the music type variables for completeness' sake, and there are two ways to go about doing this.

First, you can use base R, converting each column with factor() and assigning the result back into the data frame with `$`.
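A minimal sketch of that base R approach. So that it runs on its own, it uses a tiny made-up stand-in for twoway_exam (three rows, not the real data). It also shows the alphabetical order R uses when you don't set the levels yourself.

```r
# Hypothetical stand-in for the real data, just to make the block runnable
twoway_exam <- data.frame(
  difficulty = c("easy", "medium", "hard"),
  music = c("happy", "sad", "happy")
)

# Default behaviour: levels are sorted alphabetically
levels(factor(twoway_exam$difficulty))  # "easy" "hard" "medium"

# Base R recode: set the level order explicitly and assign back with $
twoway_exam$difficulty <- factor(twoway_exam$difficulty,
                                 levels = c("easy", "medium", "hard"))
twoway_exam$music <- factor(twoway_exam$music, levels = c("happy", "sad"))

levels(twoway_exam$difficulty)  # "easy" "medium" "hard"
```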

Or you can use factor() within mutate(), with largely identical syntax:

```r
twoway_exam <- twoway_exam %>%
  mutate(
    difficulty = factor(difficulty, levels = c("easy", "medium", "hard")),
    music = factor(music, levels = c("happy", "sad"))
  )
```

Let's start with the usual descriptives and graphs:
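The descriptives and plotting code isn't shown here, so below is a sketch of one way to produce them with dplyr and ggplot2. To keep it self-contained, it simulates a stand-in data set (random numbers, not Victor and Gloria's data); with the real data you would skip the simulation and start from twoway_exam. The column names match the real data, but the particular summaries (mean and SD) and plot styling are assumptions. Grouping by two variables and then summarising is what produces dplyr's "grouped output" message; the result simply stays grouped by difficulty, which is harmless here.

```r
library(dplyr)
library(ggplot2)

# Simulated stand-in for twoway_exam (20 participants x 6 exams = 120 rows)
set.seed(1)
twoway_exam <- expand.grid(
  ptcpt = 1:20,
  difficulty = c("easy", "medium", "hard"),  # factor levels keep this order
  music = c("happy", "sad")
)
twoway_exam$grade <- round(rnorm(nrow(twoway_exam), mean = 70, sd = 8))

# Cell means and SDs for each difficulty x music combination
twoway_desc <- twoway_exam %>%
  group_by(difficulty, music) %>%
  summarise(mean = mean(grade), sd = sd(grade))

twoway_desc

# Interaction plot: difficulty on the x-axis, one line per music type
ggplot(twoway_desc, aes(x = difficulty, y = mean, colour = music, group = music)) +
  geom_point() +
  geom_line() +
  labs(x = "Exam difficulty", y = "Mean grade", colour = "Music")
```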

```
## `summarise()` has grouped output by 'difficulty'. You can
## override using the `.groups` argument.
```

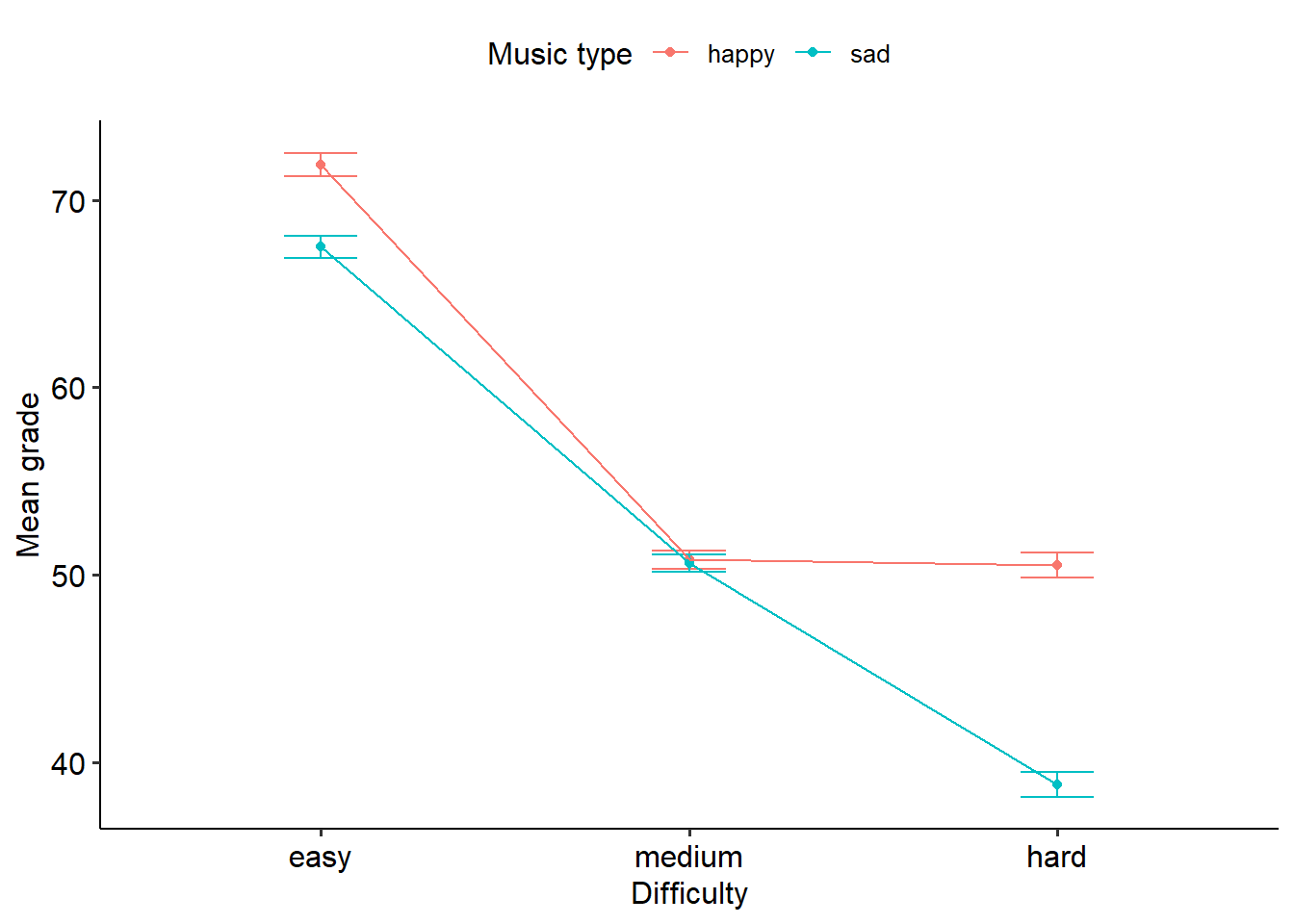

Eyeballing the graph, we can see that there might be some sort of effect happening. Unsurprisingly, it looks like the easy maths exams are, well, easier, because people score better on them. There might also be an effect of music, because in both the easy and hard conditions people seem to do better with happy music than with sad music. But the interaction plot suggests there's clearly something going on, and it paints a really interesting story on its own. There appears to be almost a 'plateau'-ing effect at medium difficulty, in that happy and sad music produce the same exam scores. However, for happy music, harder exams see no further decrease in performance, whereas for sad music there is a sharp drop again.

9.6.3 Assumptions

The assumptions for a two-way RM ANOVA are the same as for a one-way:

- The data from the conditions should be normally distributed. (More specifically, the residuals should be normally distributed.)

- The data for each subject should be independent of every other subject.

- Sphericity must be met.

As with one-way repeated measures ANOVAs, sphericity is only tested for effects with three or more levels; with only two levels, the assumption is always met. The sphericity tests are printed below alongside the main ANOVA output. Sphericity holds for all of our effects, so we don't have any issues here, but if it didn't, the same principle would apply: we would choose our correction based on the value of epsilon.
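One common rule of thumb for choosing a correction "depending on the value of epsilon" is: if Mauchly's test is non-significant, don't correct; otherwise use Greenhouse-Geisser when its epsilon is below .75 and Huynh-Feldt when it is .75 or above. The hypothetical helper below encodes that rule (the .05 and .75 cut-offs are a convention, not something R or Jamovi enforces) and applies it to the Mauchly p-values and GG epsilons reported in this example's output.

```r
# Hypothetical helper encoding a common rule of thumb for sphericity corrections
choose_correction <- function(mauchly_p, gg_eps, alpha = 0.05, cutoff = 0.75) {
  ifelse(mauchly_p >= alpha, "none",
         ifelse(gg_eps < cutoff, "Greenhouse-Geisser", "Huynh-Feldt"))
}

# Mauchly p-values and GG epsilons from the summary() output for this example
effects <- data.frame(
  effect    = c("difficulty", "difficulty:music"),
  mauchly_p = c(0.31556, 0.11750),
  gg_eps    = c(0.89263, 0.82526)
)
effects$correction <- choose_correction(effects$mauchly_p, effects$gg_eps)
effects  # both effects: "none"

# Made-up violated cases, for comparison
choose_correction(mauchly_p = 0.01, gg_eps = 0.70)  # "Greenhouse-Geisser"
choose_correction(mauchly_p = 0.01, gg_eps = 0.80)  # "Huynh-Feldt"
```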

Let's also check whether our residuals are normally distributed:

R-Note: For a repeated measures ANOVA, for some reason the closest I can get to reproducing what Jamovi does is to build an aov() model without an explicit Error() term, as you would for a fully between-subjects ANOVA. You can then use the standardised residuals to create a Q-Q plot with the code below. Note that broom::augment() is just a helper function that neatly extracts the residuals; the standardised ones are in its .std.resid column:

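```r
library(dplyr)    # provides the %>% pipe
library(ggplot2)

# Between-subjects-style model (no Error() term), used only to get residuals
aov(grade ~ difficulty * music, data = twoway_exam) %>%
  broom::augment() %>%               # adds .resid, .std.resid, etc.
  ggplot(aes(sample = .std.resid)) + # Q-Q plot of standardised residuals
  geom_qq() +
  geom_qq_line()
```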

It's not… great, but for the purposes of demonstration we'll run with it anyway.

9.6.4 Output

Here’s our output from R:

```r
library(afex)

twoway_exam_aov <- aov_ez(
  data = twoway_exam,
  id = "ptcpt",
  dv = "grade",
  within = c("difficulty", "music"),
  anova_table = list(es = "pes"),  # report partial eta squared
  include_aov = TRUE
)

twoway_exam_aov           # afex's summary table (GG-corrected by default)
summary(twoway_exam_aov)  # uncorrected table, Mauchly's tests and epsilons
```

```
## Anova Table (Type 3 tests)
##
## Response: grade
##             Effect          df   MSE          F  pes p.value
## 1       difficulty 1.79, 33.92 40.38 189.61 *** .909   <.001
## 2            music       1, 19 48.16  18.39 *** .492   <.001
## 3 difficulty:music 1.65, 31.36 39.89  10.29 *** .351   <.001
## ---
## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '+' 0.1 ' ' 1
##
## Sphericity correction method: GG
```

```
##
## Univariate Type III Repeated-Measures ANOVA Assuming Sphericity
##
##                  Sum Sq num Df Error SS den Df   F value    Pr(>F)
## (Intercept)      363220      1   511.30     19 13497.322 < 2.2e-16 ***
## difficulty        13668      2  1369.60     38   189.613 < 2.2e-16 ***
## music               886      1   915.03     19    18.390 0.0003968 ***
## difficulty:music    677      2  1251.07     38    10.286 0.0002691 ***
## ---
## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1
##
##
## Mauchly Tests for Sphericity
##
##                  Test statistic p-value
## difficulty              0.87972 0.31556
## difficulty:music        0.78826 0.11750
##
##
## Greenhouse-Geisser and Huynh-Feldt Corrections
## for Departure from Sphericity
##
##                   GG eps Pr(>F[GG])
## difficulty       0.89263  < 2.2e-16 ***
## difficulty:music 0.82526  0.0007226 ***
## ---
## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1
##
##                     HF eps   Pr(>F[HF])
## difficulty       0.9789636 4.104076e-20
## difficulty:music 0.8936983 4.903345e-04
```

Once again this is quite a busy table! We can see that there is a significant main effect of difficulty (F(2, 38) = 189.61, p < .001, ηp² = .909), as well as a significant main effect of music type (F(1, 19) = 18.39, p < .001, ηp² = .492). There is also a significant interaction between difficulty and music (F(2, 38) = 10.29, p < .001, ηp² = .351). We can also see our sphericity output here: neither difficulty (W = .880, p = .316) nor the difficulty × music interaction (W = .788, p = .118) shows a significant violation of sphericity, so we report the uncorrected results from the "Assuming Sphericity" table. Note that afex's summary table at the top applies a Greenhouse-Geisser correction by default, which is why it says "Sphericity correction method: GG" and shows non-integer dfs such as 1.79, 33.92; because sphericity holds here, the conclusions are the same either way. Also note that because music only has two levels, there is no sphericity test for it.
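The numbers in these tables are tied together by a few simple formulas, which can be handy for checking your understanding: F is the effect mean square divided by the error mean square, partial eta squared is SS_effect / (SS_effect + SS_error), and the Greenhouse-Geisser dfs are the uncorrected dfs multiplied by epsilon. The sketch plugs in the rounded values printed in the univariate table above, so it reproduces afex's output only to rounding.

```r
# Sums of squares and dfs from the "Assuming Sphericity" table
ss     <- c(difficulty = 13668, music = 886, interaction = 677)
ss_err <- c(difficulty = 1369.60, music = 915.03, interaction = 1251.07)
df_num <- c(difficulty = 2, music = 1, interaction = 2)
df_den <- c(difficulty = 38, music = 19, interaction = 38)

# F = (SS_effect / df_effect) / (SS_error / df_error)
f_value <- (ss / df_num) / (ss_err / df_den)
round(f_value, 2)

# Partial eta squared = SS_effect / (SS_effect + SS_error)
pes <- ss / (ss + ss_err)
round(pes, 3)  # difficulty .909, music .492, interaction .351

# Greenhouse-Geisser corrected dfs = uncorrected dfs * GG epsilon
gg_eps <- c(difficulty = 0.89263, interaction = 0.82526)
round(df_num[names(gg_eps)] * gg_eps, 2)  # 1.79 and 1.65
round(df_den[names(gg_eps)] * gg_eps, 2)  # 33.92 and 31.36
```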

To decompose this, we'll do our usual simple effects tests: holding one variable constant and running pairwise comparisons on the other. For this example, we'll hold difficulty constant and compare the two music types. We could just as well reverse the simple effects, but for simplicity we'll only do it one way round here.
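The code for these comparisons isn't shown, so here is a sketch of one way to run them with the emmeans package on an afex model. So that it runs on its own, it simulates a stand-in data set (random numbers, so the estimates will not match the output below) and refits the model; with the real data you would pass twoway_exam_aov straight to emmeans(). Setting model = "multivariate" asks afex to give each comparison its own error term, which yields 19 df per comparison as in the output below. Whether the original analysis applied a multiplicity adjustment isn't stated, so adjust = "none" is an assumption.

```r
library(afex)
library(emmeans)

# Simulated stand-in for twoway_exam (NOT the real data)
set.seed(42)
sim <- expand.grid(
  ptcpt = factor(1:20),
  difficulty = c("easy", "medium", "hard"),
  music = c("happy", "sad")
)
sim$grade <- rnorm(nrow(sim), mean = 70, sd = 8) +
  rep(rnorm(20, sd = 5), times = 6)  # participant-level offsets

sim_aov <- aov_ez(
  data = sim, id = "ptcpt", dv = "grade",
  within = c("difficulty", "music")
)

# Simple effects: compare music types separately at each difficulty level
music_by_difficulty <- emmeans(sim_aov, ~ music | difficulty,
                               model = "multivariate")

# infer = TRUE prints confidence intervals alongside the t-tests
simple_music <- summary(pairs(music_by_difficulty), infer = TRUE,
                        adjust = "none")
simple_music
```

Swapping the formula to `~ difficulty | music` gives the reverse decomposition. With three difficulty levels per music type there are three comparisons in each family, so the choice of `adjust` then matters; by default, pairs() uses a Tukey adjustment.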

```
## difficulty = easy:
##  contrast    estimate   SE df lower.CL upper.CL t.ratio p.value
##  happy - sad      4.4 1.78 19    0.682     8.12   2.477  0.0228
##
## difficulty = medium:
##  contrast    estimate   SE df lower.CL upper.CL t.ratio p.value
##  happy - sad      0.2 1.58 19   -3.097     3.50   0.127  0.9003
##
## difficulty = hard:
##  contrast    estimate   SE df lower.CL upper.CL t.ratio p.value
##  happy - sad     11.7 2.40 19    6.675    16.72   4.873  0.0001
##
## Confidence level used: 0.95
```

From this we can see that on easy exams, people scored on average 4.4 points higher with happy music than with sad music (p = .023). On medium exams, there was no difference between happy and sad music (p = .900). Lastly, on hard exams, people scored on average 11.7 points higher with happy music than with sad music (p < .001). Overall, this suggests that happy music is probably better for completing exams than sad music, though the medium difficulty exam is a strange one here.
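Each row of this table can be reconstructed from the estimate, its standard error and the 19 degrees of freedom: the t ratio is estimate / SE, the p-value is two-tailed from a t distribution with 19 df, and the 95% CI is estimate ± t-critical × SE. The sketch checks this for the hard-exam comparison; because the SE is printed rounded to two decimals, the results agree with the output only to rounding.

```r
# Hard-exam simple effect (happy - sad) from the output above
estimate <- 11.7
se       <- 2.40
df_err   <- 19

t_ratio <- estimate / se                       # 4.875 (printed: 4.873)
p_value <- 2 * pt(-abs(t_ratio), df_err)       # two-tailed p-value
t_crit  <- qt(0.975, df_err)                   # about 2.093
ci      <- estimate + c(-1, 1) * t_crit * se   # 95% confidence interval

round(c(t = t_ratio, p = p_value), 4)
round(ci, 2)                                   # 6.68 16.72
```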

If, instead, we wanted to hold music type constant and test differences in difficulty, we would get this output:

```
## music = happy:
##  contrast      estimate   SE df lower.CL upper.CL t.ratio p.value
##  easy - medium     21.1 1.76 19    17.42    24.78  12.005  <.0001
##  easy - hard       21.4 2.20 19    16.79    26.01   9.718  <.0001
##  medium - hard      0.3 1.72 19    -3.29     3.89   0.175  0.8630
##
## music = sad:
##  contrast      estimate   SE df lower.CL upper.CL t.ratio p.value
##  easy - medium     16.9 1.69 19    13.36    20.44   9.989  <.0001
##  easy - hard       28.7 1.98 19    24.55    32.85  14.483  <.0001
##  medium - hard     11.8 1.74 19     8.16    15.44   6.791  <.0001
##
## Confidence level used: 0.95
```

This paints a much more interesting picture of what is going on, and might be a more informative way of decomposing the interaction. But remember: only do one or the other!